Today at Apple: Designing Sound

Try the app at designsound.app

Project

This collaboration with the Music Hackspace for a “Today at Apple” event at the Covent Garden store was a bit of a dream project. For a long time, I had been thinking about doing a piece of synchronised music using all of the phones, tablets and laptops in an Apple Store, partly inspired by Kyle McDonald’s project People Staring At Computers. When the opportunity to work directly with Apple came up, I had to jump on it.

The format of the event required a talk and a participation session, so I had to work backwards from my original idea of a large spatial music performance to include these elements. I knew I would need a web app for the performance, so I decided that the app could also have interactive elements for the participation session, and that I could use the talk to introduce the ideas in the app.

An early idea was to allow the audience to participate in the performance using the app, but this felt less and less appropriate as the content of the performance changed from an instrumental piece of electronic music to a live performance of a pre-existing song. In either case I knew it would be a mistake to have the audience playing notes: either they would create musical chaos, or I would have to severely limit their “contributions”. Instead I decided to make the app about sound design, partly because it is a less well-known aspect of making music, and partly as a form of damage control. From this, I decided to make the talk about timbre: the ‘sound’ of an instrument. (An intuitive way to understand this: if you took a violin and a piano and played the same note at the same volume, all of the remaining differences in sound would be the timbre.) The timbre of an instrument is closely tied to the technologies of its time, so an Apple Store felt like an appropriate venue to talk about this connection.
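A quick way to hear the violin-and-piano idea is additive synthesis: build two tones at the same pitch and peak level, and vary only the relative strength of their harmonics. The sketch below is a minimal TypeScript illustration of that one ingredient of timbre (real instruments also differ in envelope, noise and more); the `renderTone` helper and both harmonic recipes are hypothetical and not taken from the app.

```typescript
// Illustrative harmonic recipes: the amplitude of harmonics 1, 2, 3, ...
// These numbers are made up for the example, not measured from real instruments.
const MELLOW: number[] = [1, 0.2, 0.05];
const BRIGHT: number[] = [1, 0.7, 0.5, 0.4, 0.3];

// Additive synthesis: sum sine waves at integer multiples of the fundamental.
function renderTone(
  frequency: number,
  harmonics: number[],
  sampleRate: number,
  numSamples: number,
): Float32Array {
  const out = new Float32Array(numSamples);
  for (let i = 0; i < numSamples; i++) {
    const t = i / sampleRate;
    let sample = 0;
    for (let k = 0; k < harmonics.length; k++) {
      // Harmonic k + 1 sits at (k + 1) times the fundamental frequency.
      sample += harmonics[k] * Math.sin(2 * Math.PI * frequency * (k + 1) * t);
    }
    out[i] = sample;
  }
  // Normalise to the same peak level, standing in for "the same volume".
  let peak = 0;
  for (let i = 0; i < numSamples; i++) peak = Math.max(peak, Math.abs(out[i]));
  if (peak > 0) {
    for (let i = 0; i < numSamples; i++) out[i] /= peak;
  }
  return out;
}

// Same pitch (220 Hz), same peak level, different harmonic balance.
const mellowTone = renderTone(220, MELLOW, 44100, 4410);
const brightTone = renderTone(220, BRIGHT, 44100, 4410);
```

The two buffers share pitch and peak level; everything that distinguishes them comes from the harmonic recipe, which is the kind of difference the harmonics widget lets people explore.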

Talk

The talk was originally supposed to be about my work and how I had gotten to where I am (wherever that is!). I used the first half to give some background and talk about my related work, seguing into timbre, technology, and sound design.

Daniel Levitin said in an interview that “Pop musicians compose with timbre. Pitch and harmony are becoming less important”. This idea really stuck with me, and made me think about the relationship between technology and timbre. One example I used in the talk was how the guitarists Ritchie Blackmore, Pete Townshend, and Big Jim Sullivan pushed Jim Marshall to make amplifiers louder than the Fender amps they were using, leading to the distorted “Marshall sound” that became integral to rock music.

“Pop musicians compose with timbre. Pitch and harmony are becoming less important.”

– Daniel Levitin

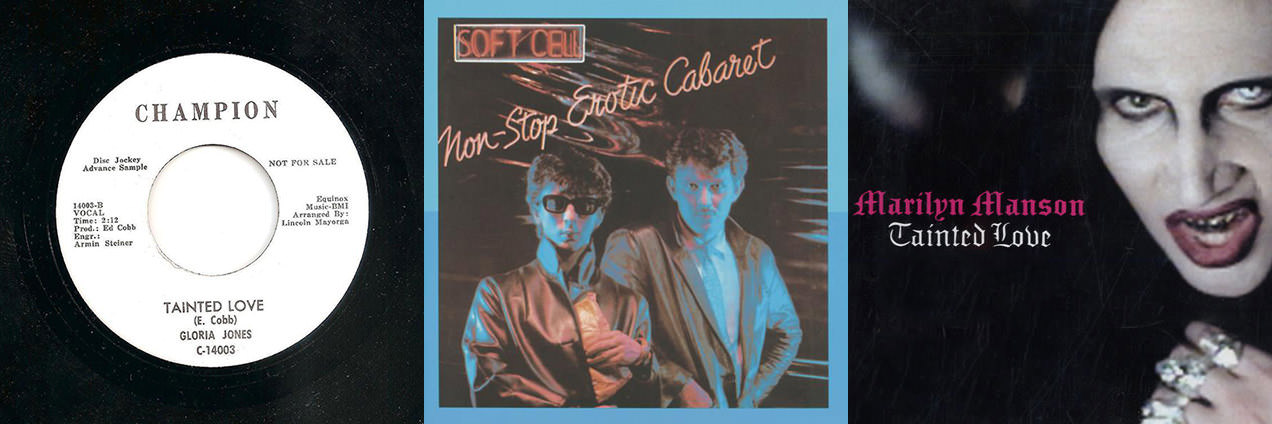

To emphasise the importance of timbre, I showed how cover versions could drastically change the vibe of a song, and reflect the technology and culture of an era, by changing the sounds while keeping the melody, harmony, and rhythm more or less the same:

Gloria Jones, 1965. Soft Cell, 1981. Marilyn Manson, 2001.

Excerpts from three versions of "Tainted Love"

I also made the case that producers like Pharrell Williams were pushing boundaries in music production more through their creative choice of sounds than through innovations in melody, harmony, or rhythm.

Full Talk

Sound Design App

(Read about the app in detail here)

To help people understand how a sound is made and why, for example, a violin sounds different to a piano, I created a bespoke app (designsound.app) that explains the different parts of a synthesiser and how they are used to shape a sound. I had great help from the wonderful Oscar Dub in getting all of the software ready in a very short time frame.

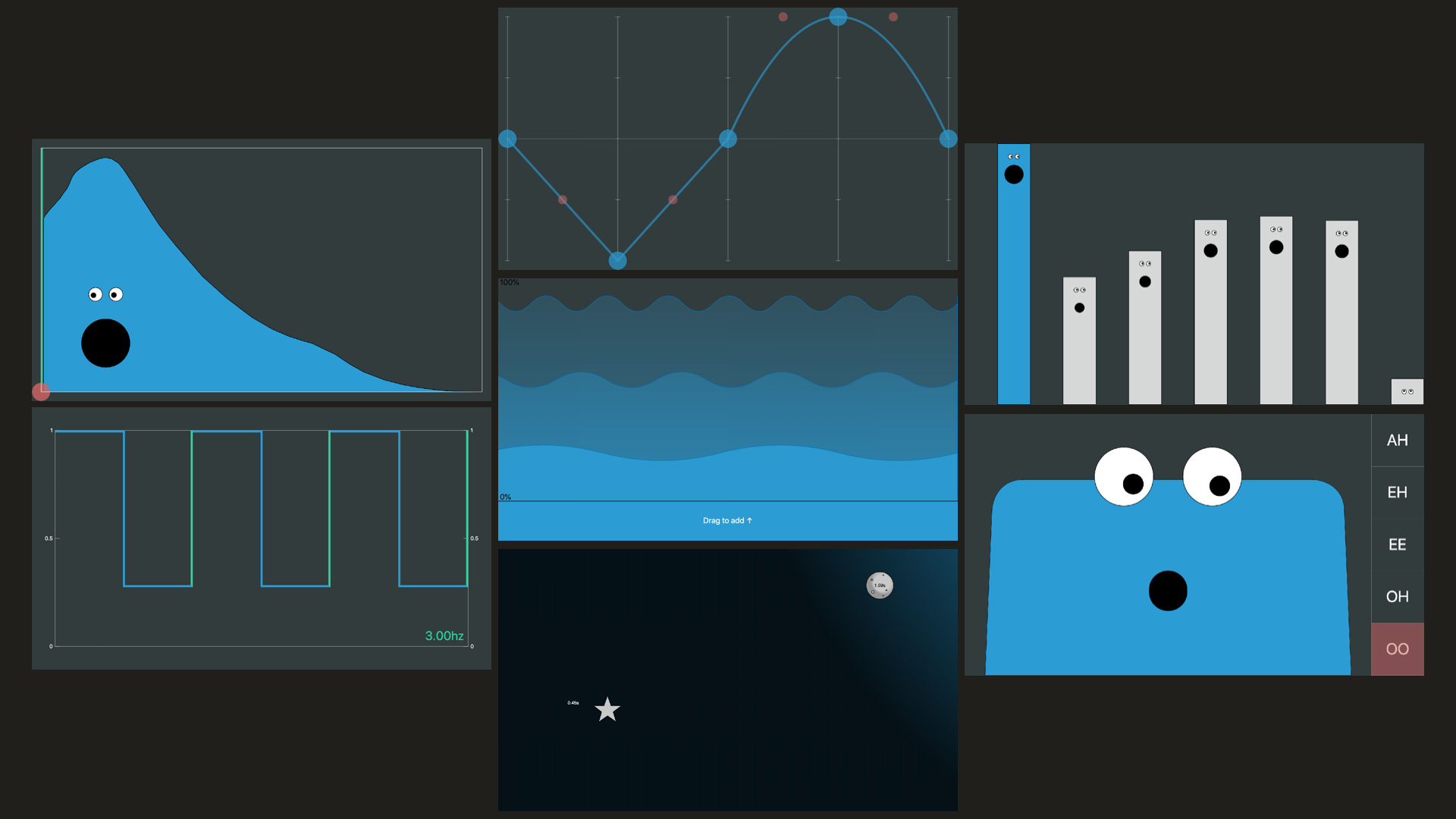

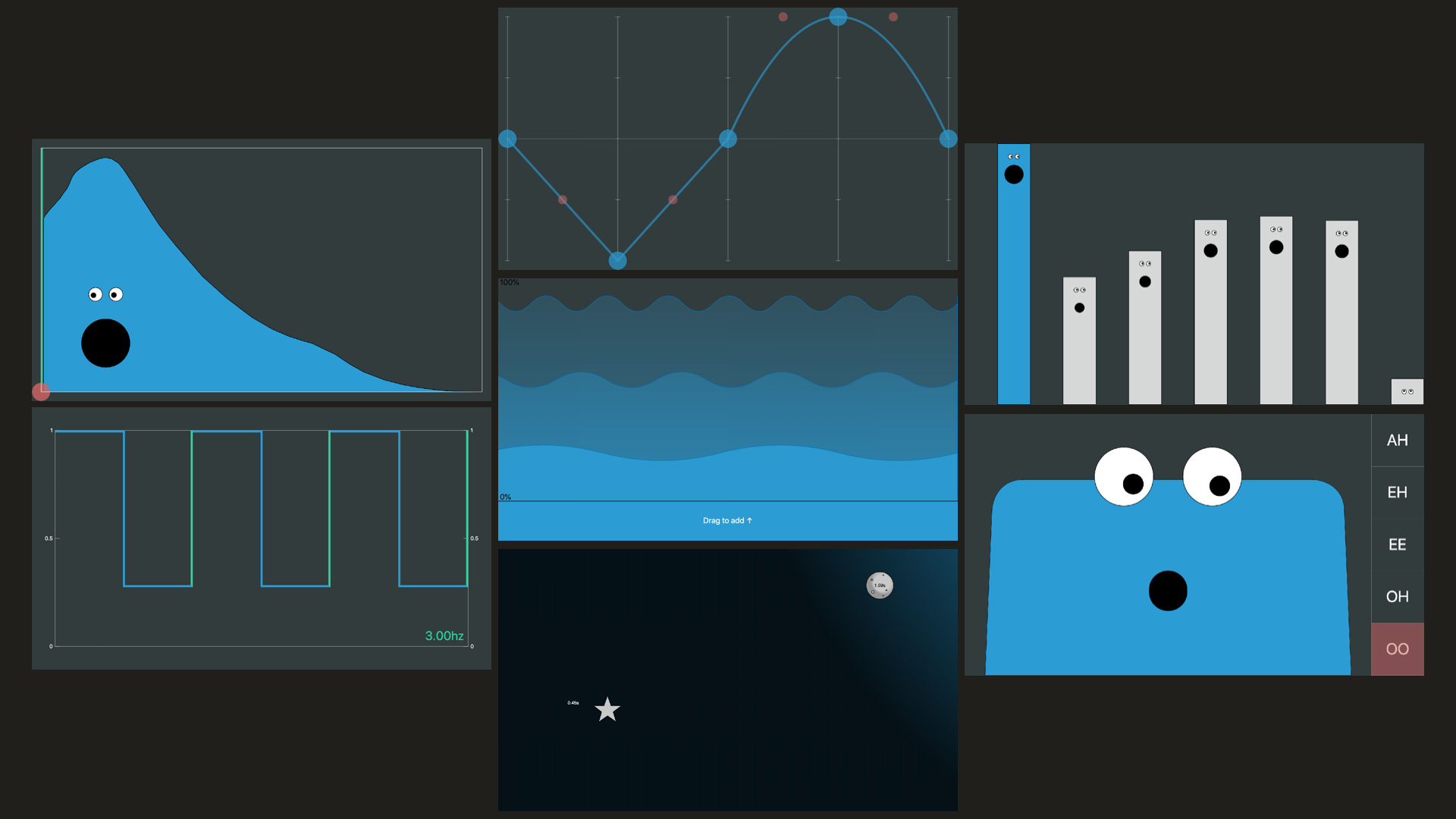

The app consists of seven widgets, each controlling one aspect of the sound: the envelope, waveform, harmonics, a filter, an amplitude modulation LFO, chorus, and reverb + echo. They were designed to be “touch first”, as the audience were going to use iPads or their own phones.
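As an illustration of what the envelope widget controls, here is a minimal linear ADSR (attack, decay, sustain, release) gain function. The article doesn't describe the app's envelope curves, so the linear segments, the `Envelope` field names and the seconds-based units are assumptions for this sketch.

```typescript
// Hypothetical envelope parameters: times in seconds, sustain as a 0..1 gain.
interface Envelope {
  attack: number;
  decay: number;
  sustain: number;
  release: number;
}

// Gain of a linear ADSR envelope `t` seconds after note-on,
// for a key held for `holdTime` seconds before note-off.
function envelopeGain(env: Envelope, t: number, holdTime: number): number {
  if (t < 0) return 0;

  // Level while the key is held: ramp up, fall to sustain, then stay there.
  const heldLevel = (time: number): number => {
    if (time < env.attack) return time / env.attack;
    const sinceAttack = time - env.attack;
    if (sinceAttack < env.decay) {
      return 1 - (1 - env.sustain) * (sinceAttack / env.decay);
    }
    return env.sustain;
  };

  if (t < holdTime) return heldLevel(t);

  // After note-off, fade linearly from the current level to silence.
  const sinceRelease = t - holdTime;
  if (sinceRelease >= env.release) return 0;
  return heldLevel(holdTime) * (1 - sinceRelease / env.release);
}
```

A pure function like this is also convenient for drawing the envelope shape in a touch interface; in a browser, the same segments would more typically be scheduled as ramps on a Web Audio gain parameter.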

While the primary goal of these interfaces was to be approachable and understandable for people who had never used a synthesiser, I wanted the results to be valuable, and in some cases even preferable, for people who are familiar with synths. One approach was to make each widget visually describe its function rather than control parameters with abstract sliders and knobs; for example, the LFO interface is a draggable, snappable visualisation of its effect on the amplitude over time.
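The “snappable” LFO can be sketched as two small pieces: snapping a dragged period to the nearest musical note value at a given tempo, and sampling the resulting tremolo curve for drawing. The list of divisions, the tempo parameter and the raised-cosine shape are all assumptions; the article only says the widget is a draggable, snappable visualisation of amplitude over time.

```typescript
// Hypothetical snap targets, in beats: 4 beats down to a 1/8 beat.
const DIVISIONS: number[] = [4, 2, 1, 1 / 2, 1 / 4, 1 / 8];

// Snap a dragged LFO period (seconds) to the nearest division at `bpm`.
function snapPeriod(draggedSeconds: number, bpm: number): number {
  const beat = 60 / bpm;
  let best = DIVISIONS[0] * beat;
  for (const d of DIVISIONS) {
    const candidate = d * beat;
    if (Math.abs(candidate - draggedSeconds) < Math.abs(best - draggedSeconds)) {
      best = candidate;
    }
  }
  return best;
}

// Tremolo gain at time t: swings between 1 and (1 - depth) once per period.
function tremoloGain(t: number, periodSeconds: number, depth: number): number {
  const phase = (2 * Math.PI * t) / periodSeconds;
  return 1 - (depth * (1 - Math.cos(phase))) / 2;
}

// Points for drawing the curve: `points` samples spread across `windowSeconds`.
function tremoloCurve(
  periodSeconds: number,
  depth: number,
  windowSeconds: number,
  points: number,
): number[] {
  return Array.from({ length: points }, (_, i) =>
    tremoloGain((i / (points - 1)) * windowSeconds, periodSeconds, depth),
  );
}
```

Drawing the curve directly means that dragging the period or depth changes exactly what the user sees, which is the sense in which the widget is "visually descriptive" rather than a bare rate knob.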

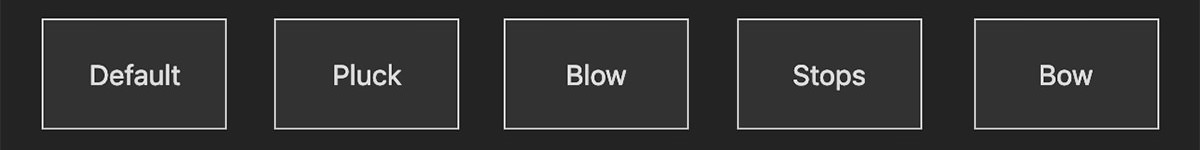

The playback controls in the app were heavily constrained: just three buttons, to play a single note, a chord, or a short melody. This meant that while people were playing with the app, the whole room stayed in the same key, and the focus stayed on the sound design aspect of music making. Presets were also provided as starting points, and to demonstrate the range of possible sounds.

Playback Controls

Presets
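The constrained controls amount to a small, fixed set of note events in one key. This sketch assumes C major, middle C as the root and a made-up seven-note phrase; the article doesn't say which key, chord or melody the app used, and `eventsFor` is a hypothetical helper.

```typescript
type PlayMode = "note" | "chord" | "melody";

// Hypothetical fixed material, all in C major, so every device agrees on key.
const ROOT = 60; // MIDI note number for middle C
const CHORD = [0, 4, 7]; // major triad, in semitones above the root
const MELODY = [0, 2, 4, 7, 4, 2, 0]; // a short phrase using notes of the scale

interface NoteEvent {
  pitch: number; // MIDI note number
  start: number; // seconds after the button press
  duration: number; // seconds
}

// Each of the three buttons maps to one fixed list of events.
function eventsFor(mode: PlayMode, stepSeconds = 0.25): NoteEvent[] {
  switch (mode) {
    case "note":
      return [{ pitch: ROOT, start: 0, duration: 1 }];
    case "chord":
      return CHORD.map((i) => ({ pitch: ROOT + i, start: 0, duration: 1 }));
    case "melody":
      return MELODY.map((i, n) => ({
        pitch: ROOT + i,
        start: n * stepSeconds,
        duration: stepSeconds,
      }));
  }
}

// MIDI note number to frequency in Hz, with A4 (note 69) at 440 Hz.
const midiToHz = (pitch: number): number => 440 * Math.pow(2, (pitch - 69) / 12);
```

Because the pitches are fixed and only the synth settings vary, whatever people do with the widgets stays in key with everyone else in the room.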

Synchronised Spatial Audio

In building the playback engine for the performance, I created a system that let me trigger notes on all of the client devices in sync. A trigger could be either a sample or a simple message telling the synthesiser to play a specific pitch with the sound that audience member had designed. At the event I did a short improvisation on my MIDI keyboard so we could hear the collective result of the audience’s sound design.
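The article doesn't say how the devices were kept in sync. One common approach, sketched here purely as an assumption, is to stamp each trigger with a time on the server's clock and have each client estimate its offset from that clock with NTP-style round-trip pings. The `Trigger` shape mirrors the two kinds of message described above (a sample, or a pitch played with the client's own sound); its field names are hypothetical.

```typescript
// Hypothetical wire format; `at` is a time on the server's clock, in milliseconds.
type Trigger =
  | { kind: "sample"; sampleId: string; at: number }
  | { kind: "note"; pitch: number; duration: number; at: number };

interface OffsetSample {
  offset: number; // estimated serverClock - localClock, in ms
  rtt: number; // round-trip time of the ping, in ms
}

// One ping: the client sends at local time t0, the server replies with its own
// clock reading `serverTime`, and the reply arrives at local time t2.
function estimateOffset(t0: number, serverTime: number, t2: number): OffsetSample {
  const rtt = t2 - t0;
  // Assume the reply took half the round trip, so the server clock now reads
  // roughly serverTime + rtt / 2 while the local clock reads t2.
  return { offset: serverTime + rtt / 2 - t2, rtt };
}

// Trust the ping with the shortest round trip: it leaves the least room for jitter.
function bestOffset(samples: OffsetSample[]): number {
  if (samples.length === 0) throw new Error("no offset samples");
  return samples.reduce((best, s) => (s.rtt < best.rtt ? s : best)).offset;
}

// How long (ms) a client should wait before playing a trigger.
function delayUntil(trigger: Trigger, offset: number, localNow: number): number {
  const localTime = trigger.at - offset; // convert server time to local time
  return Math.max(0, localTime - localNow);
}
```

On a client, the resulting delay could be handed to a timer or, more precisely, converted into an `AudioContext.currentTime` value so the Web Audio engine schedules the sound itself.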

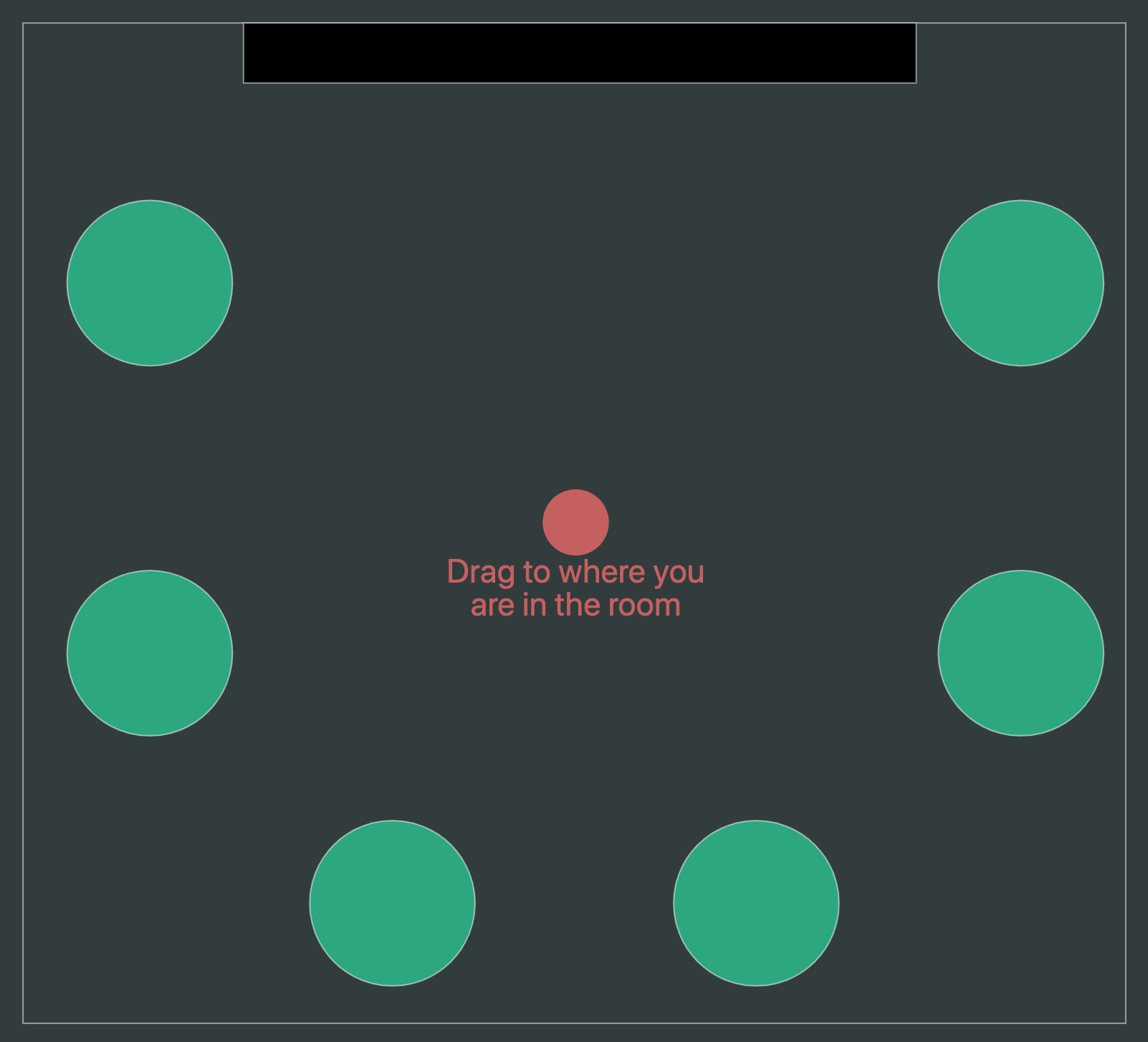

To create spatial audio effects, the audience were given a widget to set their location in the room: a cheap and reliable way of locating devices!

Room Position Interface

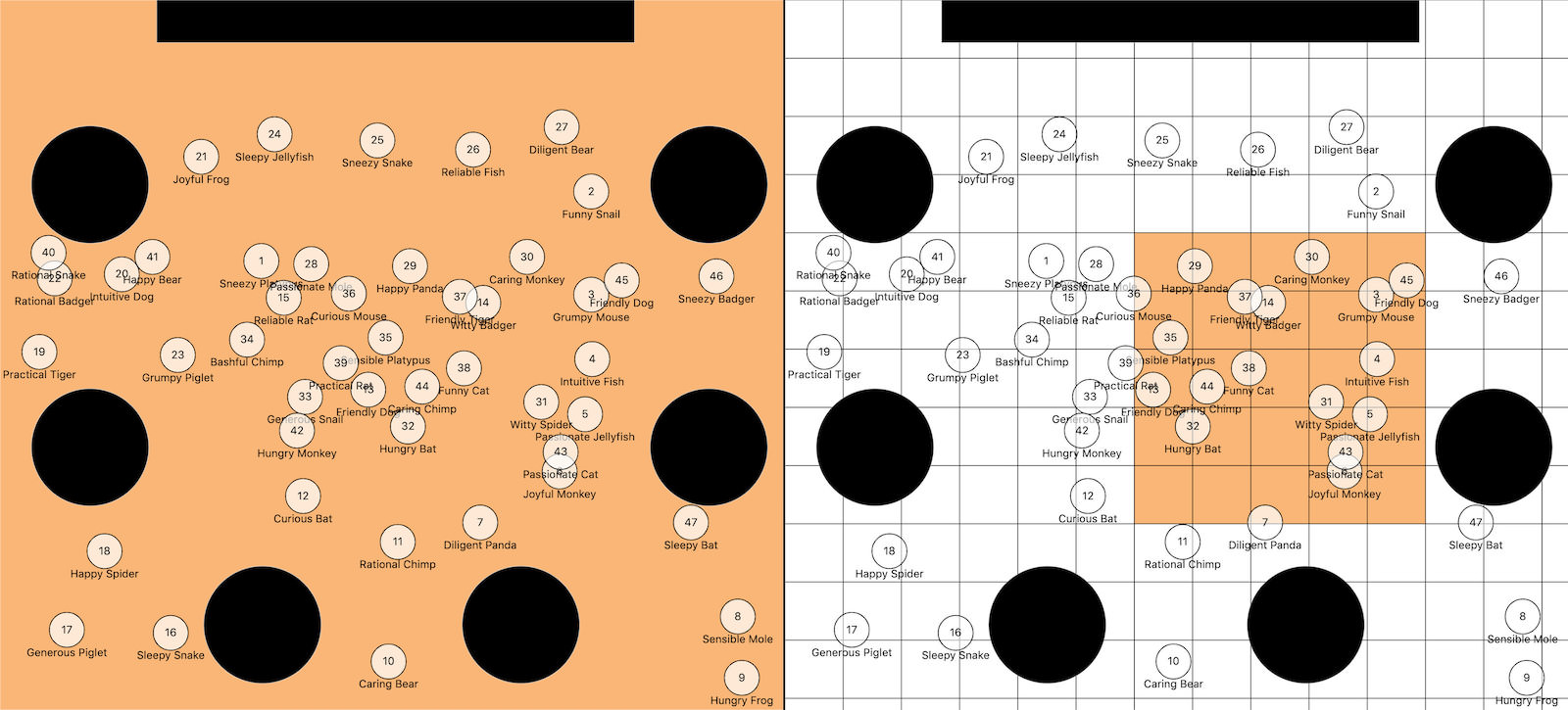

My view of the room
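Once each device has a position, spatial effects reduce to per-device timing and level. The sketch below assumes the room widget reports coordinates normalised to 0 to 1 on each axis, and shows two hypothetical effects: a wave that sweeps across the room by delaying each device according to its distance from an origin, and a gain that falls off with distance from a virtual source. Neither is documented as what the performance actually used.

```typescript
// Hypothetical position from the room widget: (0, 0) and (1, 1) are opposite corners.
interface Position {
  x: number;
  y: number;
}

// Delay (seconds) for a wave that starts at `origin` and takes `sweepSeconds`
// to travel the full diagonal of the room.
function sweepDelay(pos: Position, origin: Position, sweepSeconds: number): number {
  const distance = Math.hypot(pos.x - origin.x, pos.y - origin.y);
  return (distance / Math.SQRT2) * sweepSeconds;
}

// Gain for a virtual source: 1 at the source, fading to 0 at `radius` and beyond.
function proximityGain(pos: Position, source: Position, radius: number): number {
  const distance = Math.hypot(pos.x - source.x, pos.y - source.y);
  return Math.max(0, 1 - distance / radius);
}
```

Each client only needs its own position and the effect parameters to work out its part, so the server can broadcast one message to the whole room.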

Performance with Chagall

Finally, Chagall performed her song Surely with all of the different instruments assigned to over 100 devices in the audience; only her voice and the bass were coming from the PA. The piece finished with Chagall singing a cappella, supported by a choir coming from the audience’s devices.

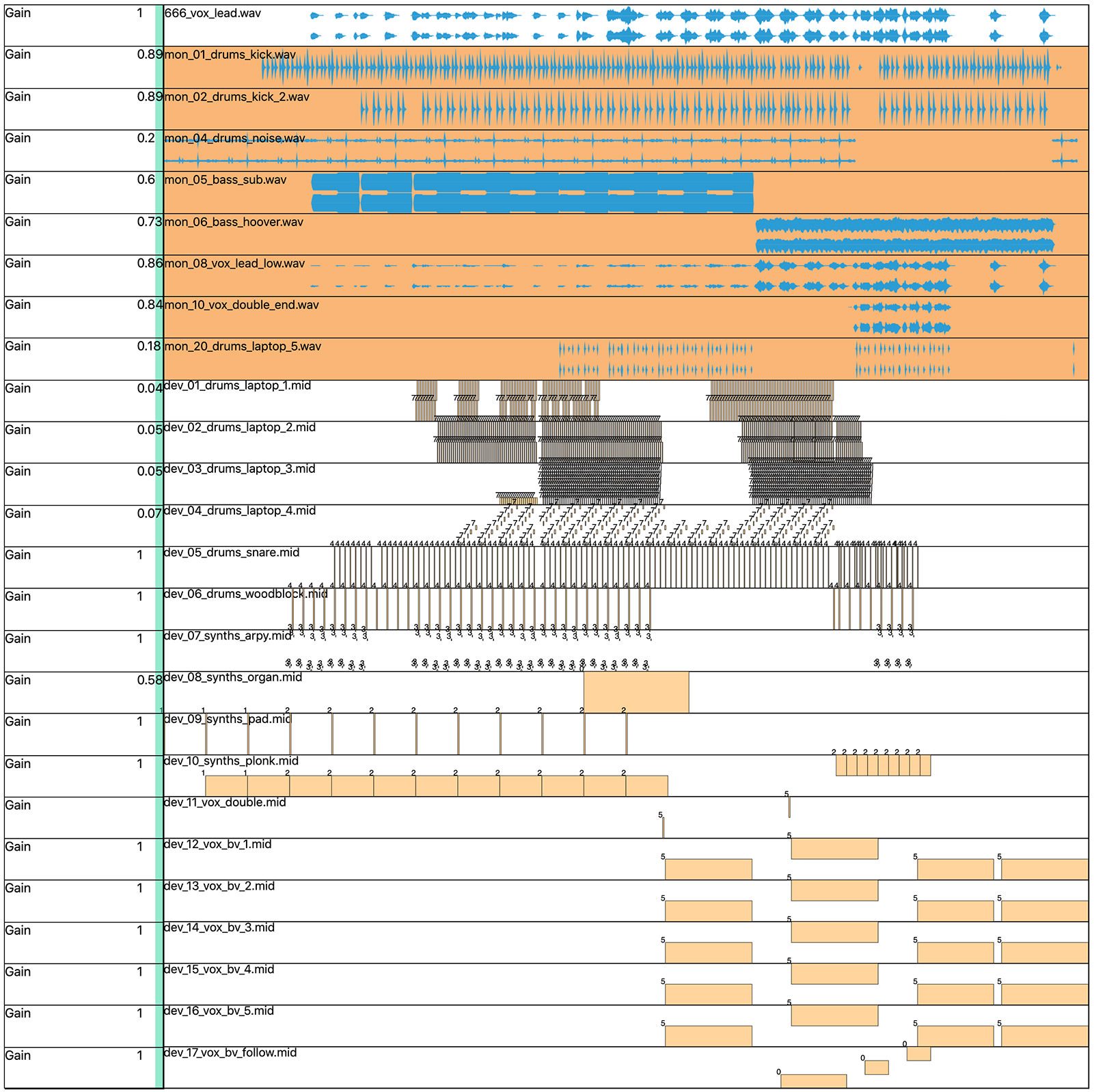

Playback Engine

I built a playback engine (using the same technology stack) that ran locally on my machine. It read a config file pointing to audio and MIDI files. To keep things simple, all audio files had to be the same length (with leading silence) so they could all be started at the same time. MIDI files were converted into data structures to be sent to a server, which distributed them to the clients. I used Logic Pro to set the MIDI channel on each note: notes on channel 1 went to all clients, channel 2 to every second client, channel 3 to every third, and so on. This was a quick way of spreading the different instruments around the space. A constant update loop used a three-second lookahead to check for notes to send to clients. I took this real-time approach (rather than sending all notes in advance) because it minimised drift, the small timing errors that grow over the length of a song (think of a boat that is off course by 1°), and it reused the live messaging code written for triggering from the MIDI keyboard.
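The channel-based spreading rule (channel 1 to every client, channel 2 to every second client, and so on) is easy to express directly. This sketch assumes clients are numbered in the order the server knows about them and that “every second client” means the 2nd, 4th, 6th and so on; both function names are hypothetical.

```typescript
// Channel `channel` (1-based, as set in Logic Pro) goes to every channel-th client.
// Clients are indexed 0..clientCount-1, so "every second client" is taken to mean
// indices 1, 3, 5, ... (the 2nd, 4th, 6th client).
function clientsForChannel(channel: number, clientCount: number): number[] {
  if (!Number.isInteger(channel) || channel < 1) {
    throw new RangeError(`invalid MIDI channel: ${channel}`);
  }
  const targets: number[] = [];
  for (let i = channel - 1; i < clientCount; i += channel) targets.push(i);
  return targets;
}

// The inverse view: which channels a given client plays.
// Client index i plays channel c exactly when (i + 1) is a multiple of c.
function channelsForClient(clientIndex: number, channelCount = 16): number[] {
  const channels: number[] = [];
  for (let c = 1; c <= channelCount; c++) {
    if ((clientIndex + 1) % c === 0) channels.push(c);
  }
  return channels;
}
```

One consequence of this rule is that low channels are dense and high channels sparse: the sixth client, for instance, plays channels 1, 2, 3 and 6, so parts on low-numbered channels are heard from many more devices than parts on high-numbered ones.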

If I were to do this again, I would definitely consider just using a DAW (like Logic or Ableton Live) with a virtual MIDI output feeding a small program that would send the notes to the server. I didn’t in this case because I was nervous about additional layers of latency, and I wanted the ability to adjust the lookahead (which also had to offset the audio by the same amount).

Song playback engine, low-budget DAW aesthetic.
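A minimal version of the lookahead loop described above might look like the following. It assumes notes have already been converted from MIDI into objects with times in seconds, and that every note is played `lookahead` seconds later than its position in the song, which is why the backing audio has to be delayed by the same amount. The class and field names are hypothetical, and the `send` callback stands in for whatever pushed messages to the server.

```typescript
// Hypothetical note format after MIDI conversion; `time` is seconds from song start.
interface SongNote {
  time: number;
  pitch: number;
  duration: number;
  channel: number;
}

// Each note sounds at startTime + lookahead + note.time, and is sent as soon as
// the song clock reaches note.time, i.e. `lookahead` seconds before it sounds.
class LookaheadScheduler {
  private next = 0; // index of the first note not yet sent
  private readonly notes: SongNote[];
  private readonly lookahead: number;
  private readonly startTime: number;
  private readonly send: (note: SongNote, playAt: number) => void;

  constructor(
    notes: SongNote[],
    lookahead: number,
    startTime: number,
    send: (note: SongNote, playAt: number) => void,
  ) {
    // Sort a copy so the scheduler can walk the list with a single cursor.
    this.notes = [...notes].sort((a, b) => a.time - b.time);
    this.lookahead = lookahead;
    this.startTime = startTime;
    this.send = send;
  }

  // The backing audio must start `lookahead` seconds late to line up with the notes.
  audioStartTime(): number {
    return this.startTime + this.lookahead;
  }

  // Called repeatedly by the update loop with seconds elapsed since startTime.
  tick(elapsed: number): void {
    while (this.next < this.notes.length && this.notes[this.next].time <= elapsed) {
      const note = this.notes[this.next++];
      this.send(note, this.startTime + this.lookahead + note.time);
    }
  }
}
```

In use, `tick` would be called from a timer (for example every 50 to 100 ms) with the seconds elapsed since `startTime`; each note then leaves the engine roughly `lookahead` seconds before it sounds, giving the network time to deliver it.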

Project

It was a very intense period of work, with only eight weeks to pull it off (and the first five were evenings and weekends, as I was contracting). I enlisted the wonderful Oscar Dub to help out for the last two weeks, and thankfully it all came together really well. All in all we wrote over 13,000 lines of TypeScript, a language I’m now a total convert to. The user interfaces also made heavy use of Karsten Schmidt’s fantastic thi.ng umbrella libraries.

Rehearsals

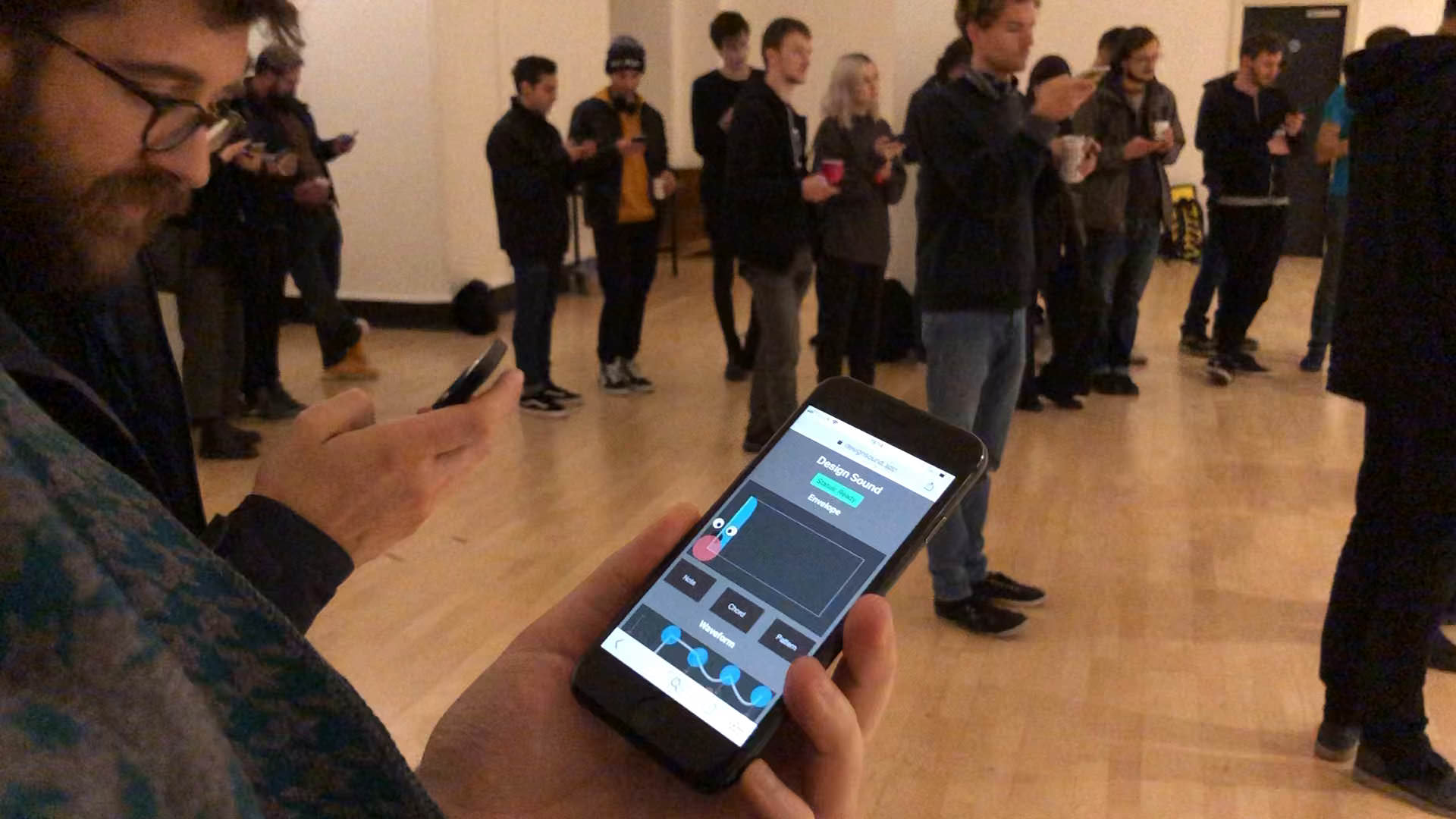

As well as running a “100 browser tabs” test on the server, we wanted to test the system with as many real people and devices as possible to root out any unknowns. The Music Hackspace helped put on a low-key event at Somerset House, where we got 50 or so people to do some patient testing in exchange for snacks and booze.

Rehearsal at Somerset House

This proved invaluable, both for finding bugs in the system and for learning how to communicate with the audience. We quickly realised that we needed to be able to stop the audience from making any noise, so we added a mode that hid the note-triggering UI on their devices.
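A “silent” mode like this can be modelled as a tiny piece of client state toggled by a control message from the performer. This is a hypothetical sketch: the message shape and names are assumptions, and as an extra guard it also ignores local taps while silent, in case a button is still on screen when the mode changes. The article only says the triggering UI was hidden.

```typescript
// Hypothetical control message sent from the performer's machine via the server.
type Mode = "play" | "silent";

interface SetModeMessage {
  type: "setMode";
  mode: Mode;
}

interface ClientState {
  mode: Mode;
}

// Pure state update, so it is easy to test and to re-render the UI from.
function applyControl(state: ClientState, msg: SetModeMessage): ClientState {
  return { ...state, mode: msg.mode };
}

// The UI only renders the note / chord / melody buttons in "play" mode.
function showTriggerButtons(state: ClientState): boolean {
  return state.mode === "play";
}

// Extra guard (an assumption, not from the article): ignore stray local taps while silent.
// Notes triggered remotely by the performer are not affected by this check.
function handleLocalTap(state: ClientState, play: () => void): boolean {
  if (state.mode !== "play") return false;
  play();
  return true;
}
```

Keeping the performer's remote triggers separate from local taps means the room can be silenced for talking without losing the ability to play notes on every device.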

We also had a rehearsal at the Apple Store early on the Sunday morning before the event, where we could try out the big screen and hear the combined volume of the iPads for the first time.

Rehearsal at the Apple Store

Credits

Arthur Carabott: Designer, Programmer

Chagall: Music - chagallmusic.com // @chaggylalala

Oscar Dub: Programmer - @oscardub

Guillaume Couche: Video - guillaumecouche.com

Music Hackspace: Production - musichackspace.org // @musichackspace

Thanks

Leslie Nooteboom, Ross Cairns, Sam Iliffe, Nat Martin, Raunaq Bose, Karsten Schmidt, Robert Spring, Matt Hall, James Bull, Tadeo Sendon, Harry Murdoch